One of the biggest pains of working with statically generated websites is the incrementally slower builds as your app grows. This is an inevitable problem any stack faces at some point, and it can strike from different points depending on what kind of product you are working with.

For example, if your app has multiple pages (views, routes), each of those routes becomes a file when generating the deployment artifact. Then, once you’ve reached thousands, you start wondering when you can deploy without needing to plan ahead. This scenario is common on e-commerce platforms or blogs, which are already a big portion of the web but not all of it. Routes are not the only possible bottleneck, though.

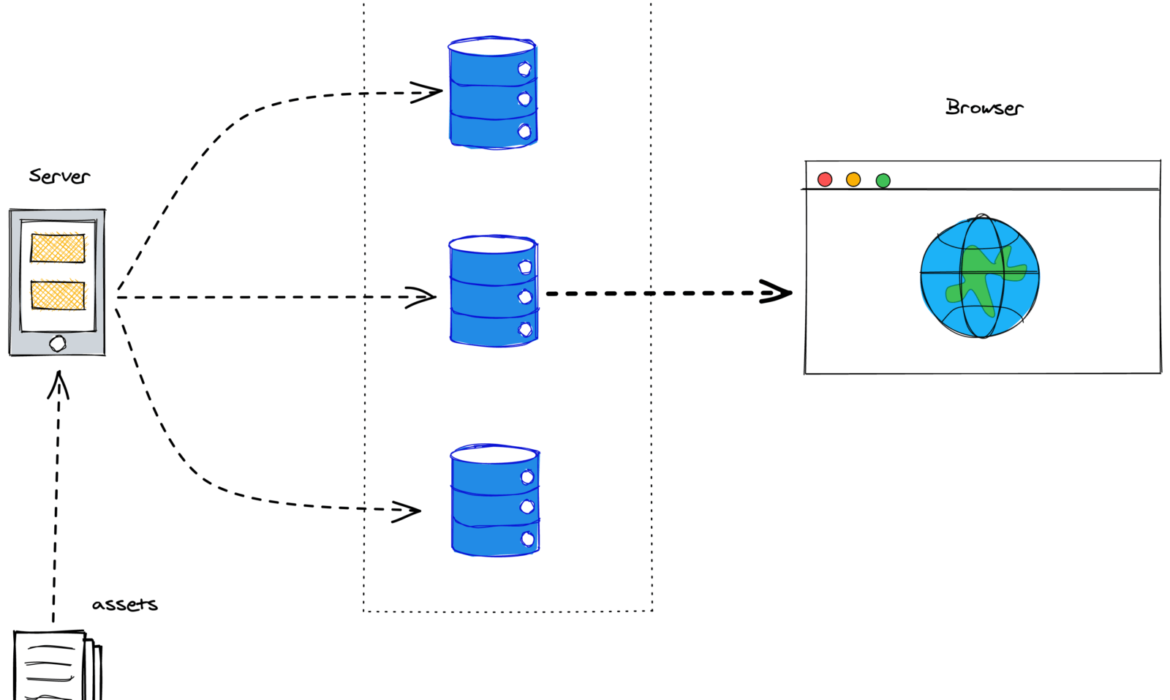

A resource-heavy app will also eventually reach this turning point. Many static generators carry out asset optimization to ensure the best user experience. Without build optimizations (incremental builds, caching, we will get to those soon) this will eventually become unmanageable as well: think about going through all images in a website, resizing, deleting, and/or creating new files over and over again. And once all that is done, remember that Jamstack serves our apps from the edges of the Content Delivery Network. So we still need to move things from the server they were compiled at to the edges of the network.

On top of all that, there is also another fact: data is often dynamic. Building and deploying our app may take a few seconds, a few minutes, or even an hour. Meanwhile, the world keeps spinning, and if we’re fetching data from elsewhere, our app is bound to get outdated. Unacceptable! Build again to update!

Build Once, Update When Needed

Solving bulky builds has been top of mind for basically every Jamstack platform, framework, or service for a while. Many solutions revolve around incremental builds. In practice, this means that builds will be only as bulky as the differences they carry against the current deployment.

Defining a diff algorithm is no easy task, though. For the end-user to actually benefit from this improvement, there are cache invalidation strategies that must be considered. Long story short: we don’t want to invalidate the cache for a page or an asset that has not changed.

Next.js came up with Incremental Static Regeneration (ISR). In essence, it is a way to declare for each route how often we want it to rebuild. Under the hood, it moves a lot of the work to the server side. Because every route (dynamic or not) will rebuild itself given a specific time frame, it fits neatly in the Jamstack axiom of invalidating cache on every build. Think of it as the max-age header, but for routes in your Next.js app.
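To make the max-age comparison concrete, here is a minimal sketch of time-based revalidation. It is not how Next.js implements ISR internally: `CachedPage`, `isStale`, and `servePage` are hypothetical names used only for illustration, the cache is a plain in-memory object, and regeneration runs inline rather than in the background.

```typescript
// Hypothetical shape of a cached, statically generated page.
interface CachedPage {
  html: string
  generatedAt: number // epoch milliseconds
}

// A page is stale once more than `revalidateSeconds` have passed since it was generated.
function isStale(page: CachedPage, revalidateSeconds: number, now: number): boolean {
  return now - page.generatedAt > revalidateSeconds * 1000
}

// Serve what is cached; if it is stale, regenerate it so the NEXT visitor gets fresh content.
function servePage(
  cache: Record<string, CachedPage>,
  route: string,
  revalidateSeconds: number,
  render: () => string,
  now: number
): string {
  const cached = cache[route]
  if (cached === undefined) {
    // Nothing generated yet: render once and store the result.
    const fresh: CachedPage = { html: render(), generatedAt: now }
    cache[route] = fresh
    return fresh.html
  }
  if (isStale(cached, revalidateSeconds, now)) {
    // Replace the entry, but still answer this request with the old copy.
    cache[route] = { html: render(), generatedAt: now }
  }
  return cached.html
}
```

The point of the sketch is that no visitor waits for a rebuild once a page exists: a stale page is still served, and the request that finds it stale is what triggers the regeneration.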

To get your application started, ISR is just a configuration property away. In your route component (inside the /pages directory) go to your getStaticProps method and add the revalidate key to the return object:

    export async function getStaticProps() {
      const { limit, count, pokemons } = await fetchPokemonList()

      return {
        props: {
          limit,
          count,
          pokemons,
        },
        revalidate: 3600, // seconds
      }
    }

The above snippet makes sure my page is regenerated at most once every hour (the rebuild is triggered by a request that arrives after the interval has elapsed) and fetches more Pokémon to display.

We still get the bulk builds every now and then (when issuing a new deployment). But this allows us to decouple content from code: by moving content to a Content Management System (CMS), we can update information in a few seconds, regardless of how big our application is. Goodbye to webhooks for updating typos!

On-Demand Builders

Netlify recently launched On-Demand Builders, which is their approach to supporting ISR for Next.js, but it also works across frameworks including Eleventy and Nuxt. In the previous section, we established that ISR was a great step toward shorter build times and addressed a significant portion of the use cases. Nevertheless, the caveats were there:

Full builds upon continuous deployment.

The incremental stage happens only after the deployment and only for the data. It is not possible to ship code incrementally.

Incremental builds are a product of time.

The cache is invalidated on a time basis. So unnecessary builds may occur, or needed updates may take longer, depending on the revalidation period set in the code.

Netlify’s new deployment infrastructure allows developers to create logic to determine what pieces of their app will build on deployment and what pieces will be deferred (and how they will be deferred).

Critical

No action is needed. Everything you deploy will be built upon push.

Deferred

A specific piece of the app will not be built upon deploy. It is deferred to be built on demand when the first request occurs, and then it is cached like any other resource of its type.
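The critical/deferred split can be sketched in a few lines. This is only a model of the behaviour described above, not Netlify’s implementation: `PageCache`, `deploy`, and `handleRequest` are hypothetical names, the cache is an in-memory object standing in for the CDN, and rendering is synchronous for brevity.

```typescript
// Hypothetical in-memory stand-in for the CDN cache: path -> rendered HTML.
type PageCache = Record<string, string>

// Critical pages are rendered at deploy time and cached up front.
function deploy(criticalPaths: string[], render: (path: string) => string): PageCache {
  const cache: PageCache = {}
  for (const path of criticalPaths) {
    cache[path] = render(path)
  }
  return cache
}

// Deferred pages are rendered on their first request, then served from cache like any other page.
function handleRequest(cache: PageCache, path: string, render: (path: string) => string): string {
  const cached = cache[path]
  if (cached !== undefined) {
    return cached
  }
  const html = render(path) // only the first request pays the build cost
  cache[path] = html
  return html
}
```

Deploying with a single critical path and then requesting the same deferred path twice calls `render` twice in total: once at deploy time and once for the first deferred request.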

Creating an On-Demand Builder

First of all, add the @netlify/functions package as a devDependency to your project:

    yarn add -D @netlify/functions

Once that is done, it is just the same as creating a new Netlify Function. If you have not set a specific directory for them, head on to netlify/functions/ and create a file of any name for your builder.

    import type { Handler } from '@netlify/functions'
    import { builder } from '@netlify/functions'

    const myHandler: Handler = async (event, context) => {
      return {
        statusCode: 200,
        body: JSON.stringify({ message: 'Built on-demand! 🎉' }),
      }
    }

    // Wrapping the handler with builder() turns the function into an On-Demand Builder.
    export const handler = builder(myHandler)

As you can see from the snippet above, the on-demand builder differs from a regular Netlify Function because it wraps its handler inside a builder() method. This method connects our function to the build tasks. And that is all you need to have a piece of your application deferred for building only when necessary. Small incremental builds from the get-go!

Next.js On Netlify

To build a Next.js app on Netlify, there are 2 important plugins that one should add to have a better experience in general: Netlify Plugin Cache Next.js and Essential Next-on-Netlify. The former caches your Next.js app more efficiently and you need to add it yourself, while the latter makes a few slight adjustments to how the Next.js architecture is built so it better fits Netlify’s, and it is available by default to every new project that Netlify can identify as using Next.js.

On-Demand Builders With Next.js

Build performance, deploy performance, caching, developer experience. These are all very important topics, but it is a lot, and it takes time to set up properly. Then we get to that old discussion about focusing on Developer Experience instead of User Experience, which is when things go to a hidden spot in a backlog to be forgotten. Not really.

Netlify has got your back. In just a few steps, we can leverage the full power of the Jamstack in our Next.js app. It’s time to roll up our sleeves and put it all together now.

Defining Pre-Rendered Paths

If you have worked with static generation inside Next.js before, you have probably heard of the getStaticPaths method. This method is intended for dynamic routes (page templates that will render a wide range of pages).

Without dwelling too much on the intricacies of this method, it is important to note that the return type is an object with 2 keys. In our Proof-of-Concept, this is the [pokemon] dynamic route file:

    export async function getStaticPaths() {
      return {
        paths: [],
        fallback: 'blocking',
      }
    }

paths is an array carrying all paths matching this route which will be pre-rendered

fallback has 3 possible values: blocking, true, or false

In our case, our getStaticPaths is determining:

No paths will be pre-rendered;

Whenever this route is called, we will not serve a fallback template; we will render the page on-demand and keep the user waiting, blocking the app from doing anything else.
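The three fallback values can be summarised as a small decision function. This is a simplified model of the documented behaviour, not Next.js source code; `resolveRequest` and the `Outcome` labels are hypothetical names chosen for this sketch.

```typescript
type Fallback = false | true | 'blocking'

type Outcome =
  | 'serve-prerendered'          // path was returned by getStaticPaths
  | 'not-found'                  // fallback: false -> 404 page
  | 'serve-fallback-then-render' // fallback: true -> show a fallback template while rendering
  | 'render-then-serve'          // fallback: 'blocking' -> the visitor waits for the rendered page

// Decide what happens to a request for a dynamic route.
function resolveRequest(path: string, prerendered: string[], fallback: Fallback): Outcome {
  if (prerendered.indexOf(path) !== -1) {
    return 'serve-prerendered'
  }
  if (fallback === false) {
    return 'not-found'
  }
  if (fallback === true) {
    return 'serve-fallback-then-render'
  }
  return 'render-then-serve'
}
```

With `paths: []` and `fallback: 'blocking'`, as in the snippet above, every first request to the route falls into the `render-then-serve` case.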

When using On-Demand Builders, make sure your fallback strategy meets your app’s goals; the fallback section of the official Next.js docs is very useful.

Before On-Demand Builders, our getStaticPaths was slightly different:

    export async function getStaticPaths() {
      const { pokemons } = await fetchPkmList()

      return {
        paths: pokemons.map(({ name }) => ({ params: { pokemon: name } })),
        fallback: false,
      }
    }

We were gathering a list of all the pokémon pages we intended to have, mapping each pokemon object to just a string with the pokémon name, and returning the { params } object carrying it to getStaticProps. Our fallback was set to false because if a route was not a match, we wanted Next.js to serve a 404: Not Found page.

You can check both versions deployed to Netlify:

With On-Demand Builder: code, live

Fully static generated: code, live

The code is also open-sourced on GitHub and you can easily deploy it yourself to check the build times. And with this cue, we slide onto our next topic.

Build Times

As mentioned above, the previous demo is actually a Proof-of-Concept, and nothing is really good or bad if we cannot measure it. For our little study, I went over to the PokéAPI and decided to catch all pokémon.

For reproducibility purposes, I capped our request (to 1000). These are not really all the pokémon within the API, but the cap ensures the number of pages will be the same for all builds, regardless of whether things get updated at any point in time.

    // API and LIMIT are constants not shown here; per the text, LIMIT is 1000.
    export const fetchPkmList = async () => {
      const resp = await fetch(`${API}pokemon?limit=${LIMIT}`)

      const {
        count,
        results,
      }: {
        count: number
        results: {
          name: string
          url: string
        }[]
      } = await resp.json()

      return {
        count,
        pokemons: results,
        limit: LIMIT,
      }
    }

I then fired both versions in separate branches to Netlify; thanks to preview deploys, they can coexist in basically the same environment. To really evaluate the difference between both methods, the ODB approach was extreme: no pages were pre-rendered for that dynamic route. Though not recommended for real-world scenarios (you will want to pre-render your traffic-heavy routes), it clearly marks the range of build-time performance improvement we can achieve with this approach.

| Strategy | Number of Pages | Number of Assets | Build time | Total deploy time |
| --- | --- | --- | --- | --- |
| Fully Static Generated | 1002 | 1005 | 2 minutes 32 seconds | 4 minutes 15 seconds |
| On-Demand Builders | 2 | 0 | 52 seconds | 52 seconds |

The pages in our little PokéDex app are pretty small and the image assets are very lean, but the gains on deploy time are very significant. If an app has a medium to large number of routes, it is definitely worth considering the ODB strategy.
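To put a number on those gains, the figures from the measurements above can be turned into percentages. The helper names `toSeconds` and `percentSaved` are made up for this sketch; the durations are the ones reported for the two strategies.

```typescript
// Convert a "minutes, seconds" duration into seconds.
function toSeconds(minutes: number, seconds: number): number {
  return minutes * 60 + seconds
}

// Share of time saved when going from `before` to `after`, as a percentage.
function percentSaved(beforeSeconds: number, afterSeconds: number): number {
  return ((beforeSeconds - afterSeconds) / beforeSeconds) * 100
}

// Build time: 2 min 32 s (fully static) vs 52 s (On-Demand Builders).
const buildSaved = percentSaved(toSeconds(2, 32), toSeconds(0, 52)) // ≈ 65.8

// Total deploy time: 4 min 15 s (fully static) vs 52 s (On-Demand Builders).
const deploySaved = percentSaved(toSeconds(4, 15), toSeconds(0, 52)) // ≈ 79.6
```

In other words, in this extreme setup the build took roughly two thirds less time, and the total deploy roughly four fifths less.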

It makes your deploys faster and thus more reliable. The performance hit only happens on the very first request; from the subsequent request onward, the rendered page will be cached right on the Edge, making the performance exactly the same as the Fully Static Generated version.

The Future: Distributed Persistent Rendering

On the very same day On-Demand Builders were announced and put on early access, Netlify also published their Request for Comments on Distributed Persistent Rendering (DPR).

DPR is the next step for On-Demand Builders. It capitalizes on faster builds by making use of such asynchronous building steps and then caching the assets until they are actually updated. No more full builds for a 10k-page website. DPR gives developers full control over the build and deploy systems through solid caching and On-Demand Builders.

Picture this scenario: an e-commerce website has 10k product pages, which means it would take something around 2 hours to build the entire application for deployment. We don’t need to argue how painful this is.

With DPR, we can set the top 500 pages to build on every deploy. Our heaviest traffic pages are always ready for our users. But we are a shop, i.e. every second counts. So for the other 9500 pages, we can set a post-build hook to trigger their builders, deploying the remainder of our pages asynchronously and immediately caching them. No users were hurt, our website was updated with the fastest build possible, and everything else that did not exist in cache was then stored.
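The split in that scenario could be computed along these lines. This is purely illustrative: DPR is a proposal and no such API exists in it; `PageStats`, `monthlyViews`, and `partitionPages` are hypothetical names, and ranking by page views is just one assumed way of deciding which pages count as "top".

```typescript
// Hypothetical traffic record for a page.
interface PageStats {
  path: string
  monthlyViews: number
}

// Pick the `criticalCount` most visited pages to build on every deploy;
// everything else is left for a post-build hook (or the first visitor) to trigger.
function partitionPages(
  pages: PageStats[],
  criticalCount: number
): { critical: string[]; deferred: string[] } {
  // Copy before sorting so the caller's array is left untouched.
  const ranked = pages.slice().sort((a, b) => b.monthlyViews - a.monthlyViews)
  return {
    critical: ranked.slice(0, criticalCount).map((page) => page.path),
    deferred: ranked.slice(criticalCount).map((page) => page.path),
  }
}
```

For a catalogue of 10,000 product pages and a `criticalCount` of 500, this yields 500 critical paths and 9,500 deferred ones.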

Conclusion

Although many of the discussion points in this article were conceptual and the implementation is yet to be defined, I am excited about the future of the Jamstack. The advances we are making as a community revolve around the end-user experience.

What is your take on Distributed Persistent Rendering? Have you tried out On-Demand Builders in your application? Let me know more in the comments or call me out on Twitter. I’m really curious!

References

“A Complete Guide To Incremental Static Regeneration (ISR) With Next.js,” Lee Robinson

“Faster Builds For Large Sites On Netlify With On-Demand Builders,” Asavari Tayal, Netlify Blog

“Distributed Persistent Rendering: A New Jamstack Approach For Faster Builds,” Matt Biilmann, Netlify Blog

“Distributed Persistent Rendering (DPR),” Cassidy Williams, GitHub
